Intro

This week, Proton announced their large language model: Lumo. The announcement positions Lumo as a “private AI assistant” and continues:

“With no logs kept and every chat encrypted, Lumo keeps your conversations confidential and your data fully under your control - never shared, sold, or stolen.”

This sounds great at a surface level, but I’d like to discuss something I haven’t seen any other article or blog post address yet: large language models (LLMs) need unencrypted messages in order to process them, and the LLM’s output is unencrypted when it is generated.

Lumo’s features

Lumo’s announcement advertises the following features:

- No logs

“[…] Lumo doesn’t keep any logs of your conversations server side, and any chats you save can only be decrypted on your device.”

- Zero-access encryption

“Your chats are **stored** using our battle-tested zero-access encryption […]” (emphasis added)

- No data sharing

“[…] Lumo’s no logs and encrypted architecture ensure we don’t have data to share […]”

- Not used to train AI

“[…] Lumo doesn’t use your conversations or inputs to train the large language model.”

- Open

“Lumo is based upon open-source language models and operates from Proton’s European datacenters. […]”

All of these features are, frankly, great. However, it’s also important to understand what is not said. The zero-access encryption refers to “stored” chats, which raises the question: what happens to chats while they are taking place? Are they encrypted?

Encryption During Processing

Lumo states that messages are encrypted before being sent to Proton servers, temporarily decrypted in a secure environment for the LLM to understand, and then Lumo’s response is encrypted prior to being sent back to you.

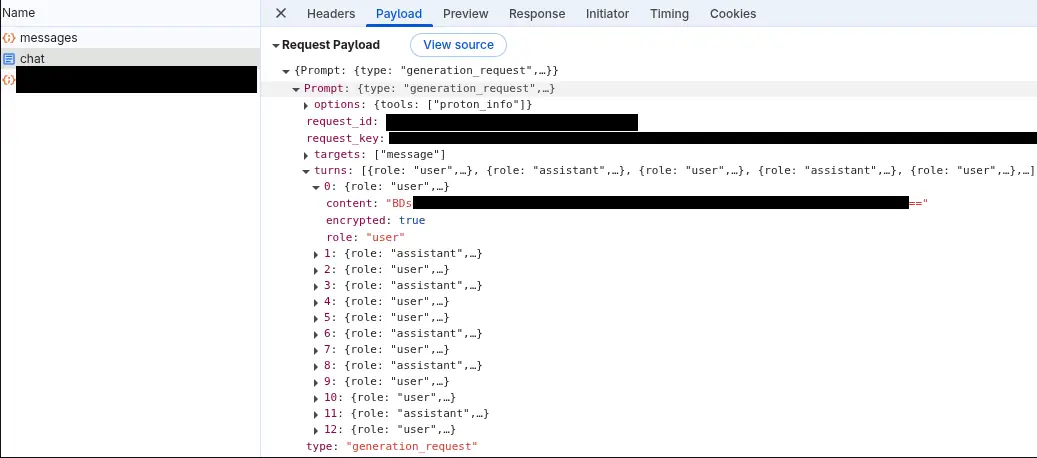

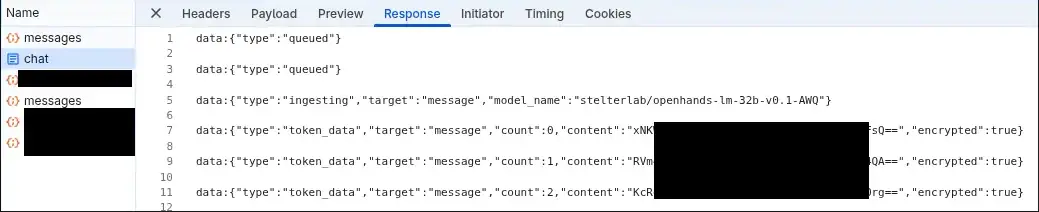

While I would take anything an LLM says about itself with a grain of salt, this did match what I observed in my initial conversations with Lumo. When I sent messages to Lumo, I could see that the individual tokens were bidirectionally client-side encrypted: my messages were encrypted on my device before being sent to Proton, and the response I received back from Proton was likewise encrypted before being sent to me. This is an extra step beyond normal HTTPS communication, and it would further protect your conversation if it were somehow intercepted in transit.

Here is a message payload sent to Lumo. Note the `"encrypted": true` flag and that the content itself is encrypted.

Similarly, this was the response received from Lumo. Note again the `"encrypted": true` flag: Lumo’s response to me was also received encrypted.

No logs, but context is resent

Notice also that the message payload sent to Lumo is structured as turns. This is because LLMs are stateless: to understand earlier parts of a conversation, the model needs them supplied in its context window. With Lumo, your client encrypts the previous chat contents (both sides) and sends them to Lumo with each message to provide that context. Think of it like replying to an email chain: the person you’re responding to can read your latest message as well as the prior message history. Here, that other person is Lumo, and while there are “no logs”, you inherently provide the latest portions of the conversation to Lumo with each interaction. I haven’t tested the maximum number of turns sent to Lumo, but it self-reported that this is typically 10-20 messages.
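To make the “no logs, but context is resent” point concrete, here is a minimal Python sketch of a stateless chat client. The transcript lives only on the client, and each request bundles the most recent prior messages with the new one. The class and field names are hypothetical, the 10-message cap is an assumption loosely based on Lumo’s unverified self-report, and the per-turn encryption Lumo applies is left out; this is not Proton’s client code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Turn:
    role: str     # "user" or "assistant"
    content: str  # plaintext here; Lumo would encrypt this before sending


@dataclass
class StatelessChatClient:
    # Hypothetical cap on resent history (Lumo self-reported 10-20 messages).
    max_messages: int = 10
    history: list[Turn] = field(default_factory=list)

    def build_payload(self, new_message: str) -> list[dict]:
        """Bundle the most recent prior messages with the new one.

        The server keeps no state, so anything it should "remember"
        has to be sent again on every request.
        """
        # Guard needed because history[-0:] would return the whole list.
        recent = self.history[-self.max_messages:] if self.max_messages > 0 else []
        turns = recent + [Turn("user", new_message)]
        return [{"role": t.role, "content": t.content} for t in turns]

    def record(self, user_message: str, reply: str) -> None:
        """Store both sides of a completed exchange locally."""
        self.history.append(Turn("user", user_message))
        self.history.append(Turn("assistant", reply))
```

Whatever the server’s logging policy, every call to `build_payload` hands it a fresh copy of recent history, which the server must decrypt in order to use.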

Is it safe?

The answer to whether it is safe is “it depends”: it depends on your individual threat model and privacy needs. Overall, Proton’s Lumo is a step in the right direction if you are looking for a privacy-focused LLM, and I appreciate Proton offering privacy-respecting options to the extent they are able.

Lumo’s description does not mention homomorphic encryption, a form of encryption that lets computers operate on data without decrypting it first. If Lumo were using homomorphic encryption, each interaction would likely be impractically slow, and Proton would likely mention it as a feature. What we’re left with is that Lumo still needs to temporarily decrypt your messages in its “secure environment”, and the response it generates is unencrypted until it is encrypted for sending back to you.
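For a feel of what “computing on encrypted data” means, here is a toy Python sketch of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This is my own illustration, not anything Proton uses. The primes are deliberately tiny and provide no security, and Paillier only supports addition; running an LLM on encrypted prompts would need fully homomorphic encryption supporting arbitrary computation, which is far more expensive still.

```python
import math
import secrets

# Toy primes so this runs instantly; real keys use primes of 1024+ bits.
P, Q = 293, 433


def keygen(p: int = P, q: int = Q):
    """Return a Paillier (public, private) key pair."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1  # common simplified generator choice
    # mu = L(g^lam mod n^2)^-1 mod n, with L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)


def encrypt(pub, m: int) -> int:
    """Encrypt 0 <= m < n using fresh randomness r coprime to n."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # 1 <= r < n
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(priv, c: int) -> int:
    """Recover the plaintext; requires the private values lam and mu."""
    lam, mu, n = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiply ciphertexts, which adds the hidden plaintexts (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)
```

For example, encrypting 1200 and 34, combining the ciphertexts with `add_encrypted`, and decrypting the result gives 1234, yet the party doing the addition only ever handles ciphertexts.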

Lumo’s support article briefly mentions the decryption of messages:

“[…] In addition, we asymmetrically encrypt your prompts so only the Lumo GPU servers are able to decrypt them.”

This means that Lumo is not truly end-to-end encrypted in the way we might expect from Proton’s encrypted email service (where only the intended human users can decrypt the message). Proton could, theoretically, be compelled to capture new Lumo requests from a given user at the point where those messages are decrypted by the Lumo GPU servers.

Alternatives

An alternative is to run an LLM locally, which eliminates third-party access to your data. This carries its own caveats and risks, such as needing the hardware to run an LLM and being the steward of your own data.

If you’re interested in running a local LLM, I’d suggest looking into Ollama.
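As a starting point, here is a minimal Python sketch that sends a conversation to a locally running Ollama server through its REST chat endpoint (`/api/chat` on the default port 11434), using only the standard library. The function names are my own and the sketch assumes Ollama’s defaults. Like Lumo, the endpoint is stateless, so the `messages` list carries the conversation context, but here it never leaves your machine.

```python
import json
import urllib.request

# Ollama's local server listens on this address by default.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, messages: list[dict]) -> urllib.request.Request:
    """Build a non-streaming chat request for a local Ollama server."""
    body = json.dumps({"model": model, "messages": messages, "stream": False})
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, messages: list[dict], timeout: float = 120.0) -> str:
    """Send the conversation to the local model and return the reply text."""
    req = build_chat_request(model, messages)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)
    # Non-streaming responses carry the assistant turn under "message".
    return reply["message"]["content"]
```

With Ollama running and a model pulled (for example `ollama pull llama3.2`), calling `chat("llama3.2", [{"role": "user", "content": "Hello"}])` returns the model’s reply, and both the prompt and the response stay on localhost.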

Conclusion

Overall, I am impressed with what Proton has done. The features Lumo is advertising are meaningful privacy improvements over the standard LLM practices. I was also impressed by the bidirectional client-side encryption approach compared to competitors who only use HTTPS without this added layer of protection for in-transit communication.

The point I wanted to make in this post is that the Lumo announcement was carefully phrased around encryption at rest, because:

- the messages sent to Lumo must be temporarily decrypted for Lumo to process them.

- Lumo’s response is generated as unencrypted text before being encrypted and sent back to you.

- portions of the conversation context (previous messages) get resent with each interaction.

The key distinction is that Lumo provides end-to-end encryption between you and the AI, rather than traditional human-to-human E2E encryption. While Proton advertises zero-access encryption for stored chats and claims no logs are kept, Lumo still requires decryption of messages for processing. The only way to avoid a third party potentially having access to your data is to run an LLM locally.

Update: 5 Aug 2025

Proton has released a blog post with more details on Lumo. The post confirms much of what was covered here, such as:

- Proton explicitly acknowledges the E2E terminology distinction I made: “we acknowledge this is not the regular definition of end-to-end encryption, which is why we prefer to call it user-to-Lumo (U2L) encryption to avoid any misunderstanding”

- Homomorphic encryption was impractical for real-time AI interactions

- The `"encrypted": true` flags I observed on messages to/from Lumo are confirmed to be AES encryption, part of an AES + PGP hybrid approach where AES handles message encryption for speed and PGP manages key exchange. Proton claims “no intermediate system inside Proton can read the message content” until it reaches the LLM server, which speaks to the protection during internal routing. However, this still confirms that Proton’s LLM servers can decrypt the messages for processing, should that be a concern for a user.

- Proton has now used the term “bidirectional asymmetric encryption” to describe their system, which aligns with my observation of “bidirectional client-side encryption”: both describe encryption happening in both directions, though from different technical perspectives.
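The AES + PGP split Proton describes is a standard hybrid encryption pattern: a fast symmetric cipher encrypts the message, and public-key cryptography protects the symmetric key. Here is a toy Python sketch of that structure using only the standard library. A SHAKE-256 keystream stands in for AES and unpadded textbook RSA stands in for PGP; both substitutions are assumptions made to keep the example self-contained, the function and field names are hypothetical, it is not Proton’s implementation, and none of it is secure. It covers only the prompt direction.

```python
import hashlib
import secrets

# Toy RSA key pair for the "server" (unpadded textbook RSA over two
# Mersenne primes). This stands in for the PGP layer and is NOT secure.
P, Q = 2**89 - 1, 2**107 - 1
N, E = P * Q, 65537                # public key, known to the client
D = pow(E, -1, (P - 1) * (Q - 1))  # private key, held only by the server


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stand-in for AES: XOR data with a SHAKE-256 keystream.

    Applying it twice with the same key and nonce restores the input.
    Unlike a real AEAD mode, there is no integrity protection.
    """
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


def client_encrypt(message: str) -> dict:
    """Encrypt a prompt on the user's device before it is sent."""
    session_key = secrets.token_bytes(16)  # fresh symmetric key per message
    nonce = secrets.token_bytes(12)
    content = keystream_xor(session_key, nonce, message.encode("utf-8"))
    # Only the small session key goes through the slow public-key step.
    wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)
    return {
        "encrypted": True,
        "nonce": nonce,
        "wrapped_key": wrapped_key,
        "content": content,
    }


def server_decrypt(envelope: dict) -> str:
    """Runs on the LLM server: unwrap the key, then recover the prompt."""
    session_key = pow(envelope["wrapped_key"], D, N).to_bytes(16, "big")
    plaintext = keystream_xor(session_key, envelope["nonce"], envelope["content"])
    return plaintext.decode("utf-8")
```

The last function is the crux of this post: the server holds the private exponent `D`, so once a prompt arrives it can recover the plaintext the model needs, no matter how well the message was protected in transit.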